Patients’ trust and AI adoption in healthcare.

This research investigates how trust formation influences appropriate reliance on AI-generated diagnoses, and whether explainability and depth of information influence patients' trust.

Impact. Designed and delivered 2 controlled experiments with 156 participants, analysed with mixed ANOVAs. Identified opportunities to encourage trust formation in AI healthcare services.

My role. Behavioural research, experimental design, data gathering, statistical analysis.

The healthcare AI trust challenge.

AI has been reported to detect cancer 29% more effectively than radiologists, with the potential to enable timely, life-saving interventions. However, many healthcare organisations focus on layering technology onto already complex infrastructures and sometimes forget that if patients don't trust the technology, adoption fails and investments are wasted.

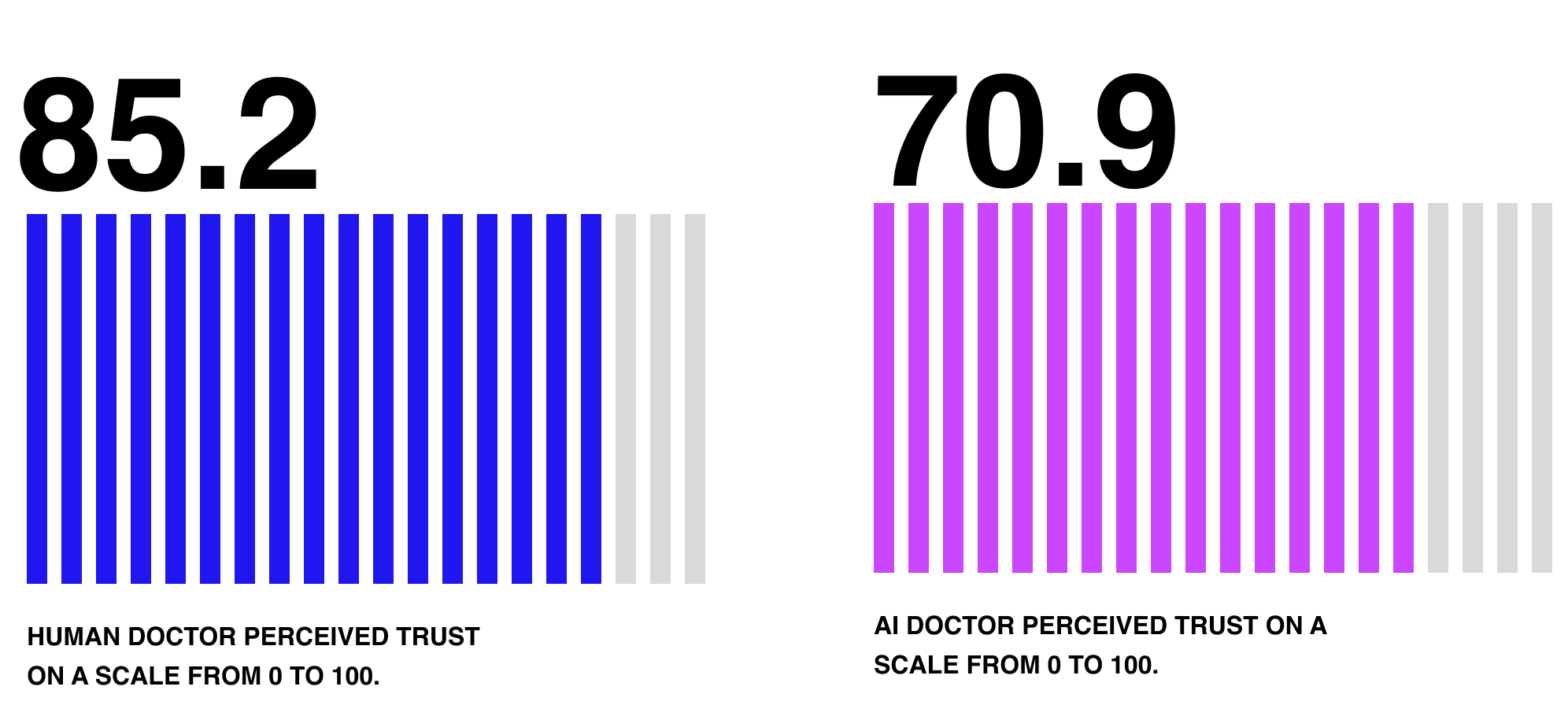

The experimental study I ran at UCL (N = 156) suggests that patients consistently trust identical diagnoses 13 to 15.5 points less when they are delivered by AI rather than by a human doctor. This trust gap is a business-critical barrier that determines whether AI investments succeed or become expensive shelfware.

Main opportunities.

01.

Balance systemic trust with cognitive and emotional trust: maintain strong institutional trust (in the healthcare system) while preserving interpersonal trust (between patients and clinicians), using AI to augment human touchpoints rather than replace them.

✦ Create transparent, patient-first AI governance structures.

✦ Design hybrid consultation workflows in which human-AI teams work in partnership.

✦ Treat AI as a clinical tool, not a clinical actor.

02.

Prioritise simplicity: give patients tailored information and keep communication simple in high-stakes decisions.

✦ Use progressive disclosure of diagnostic information where possible, giving action-driven information first and details later.

✦ Develop trust recovery mechanisms, with clear pathways for patients to request a second opinion from a human doctor.

✦ Build an explanation hierarchy into AI systems, with adaptable AI behaviours tailored to patients and to healthcare providers.
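The three recommendations above (progressive disclosure, a trust recovery pathway, and an explanation hierarchy tailored to the audience) could be combined in a product roughly as follows. This is a minimal Python sketch under stated assumptions: `DiagnosisReport`, its ordered `tiers`, the `"patient"`/`"clinician"` audience values and the example texts are all hypothetical illustrations, not part of the study or of any real system.

```python
from dataclasses import dataclass, field


@dataclass
class DiagnosisReport:
    """Hypothetical explanation hierarchy for one AI-generated diagnosis.

    tiers are ordered from action-driven (what to do next) to most detailed
    (diagnostic reasoning and terminology).
    """
    tiers: list[str]
    audience: str = "patient"  # hypothetical values: "patient" or "clinician"
    revealed: int = field(init=False, default=0)
    human_review_requested: bool = field(init=False, default=False)

    def __post_init__(self):
        # Clinicians start with every tier; patients start with the action tier only.
        if self.audience == "clinician":
            self.revealed = len(self.tiers)
        else:
            self.revealed = min(1, len(self.tiers))

    def visible(self):
        return self.tiers[: self.revealed]

    def show_more(self):
        # Progressive disclosure: reveal one more tier per request, never past the end.
        self.revealed = min(self.revealed + 1, len(self.tiers))
        return self.visible()

    def request_second_opinion(self):
        # Trust recovery pathway: flag the case for review by a human doctor.
        self.human_review_requested = True


# Made-up example content, for illustration only.
report = DiagnosisReport(tiers=[
    "Next step: book an appointment with your GP this week.",
    "What we found: an irregular heart rhythm in your test results.",
    "How the AI reached this: patterns in your ECG compared with similar cases.",
])
print(report.visible())    # only the action-driven tier
print(report.show_more())  # action tier plus "What we found"
report.request_second_opinion()
```

Keeping the disclosure step explicit (`show_more`) means the patient, not the system, decides when to see more detail, while the second-opinion flag keeps a human doctor one step away.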

03.

Continue to favour transparent, human-first AI integration, keeping clinicians as the primary decision-makers and patient-facing authorities.

✦ Train clinicians to clearly communicate the opportunities and limitations of AI-generated diagnoses.

✦ Focus on clinician-AI collaboration workflows, identifying the critical points where clinicians and AI intersect.

✦ Clearly define human accountability structures, keeping clinicians as the responsible parties for all diagnostic and treatment decisions.

Understanding trust formation.

Patients' adherence to therapy is widely thought to be directly associated with the trust they develop with their physician. The introduction of AI systems in healthcare challenges this dynamic: AI offers the opportunity to assist patients when a doctor isn't available, but it also faces significant adoption challenges.

To understand how AI systems can be integrated into existing health services, I designed 2 psychological experiments examining how trust in AI-generated diagnoses forms when they are delivered by the AI system itself versus by a human doctor.

Experiment 1.

Research Question. How does the complexity of diagnostic explanations affect patient trust in AI-generated medical diagnoses?

Prediction. An extended explanation of the diagnosis increases perceived trust.

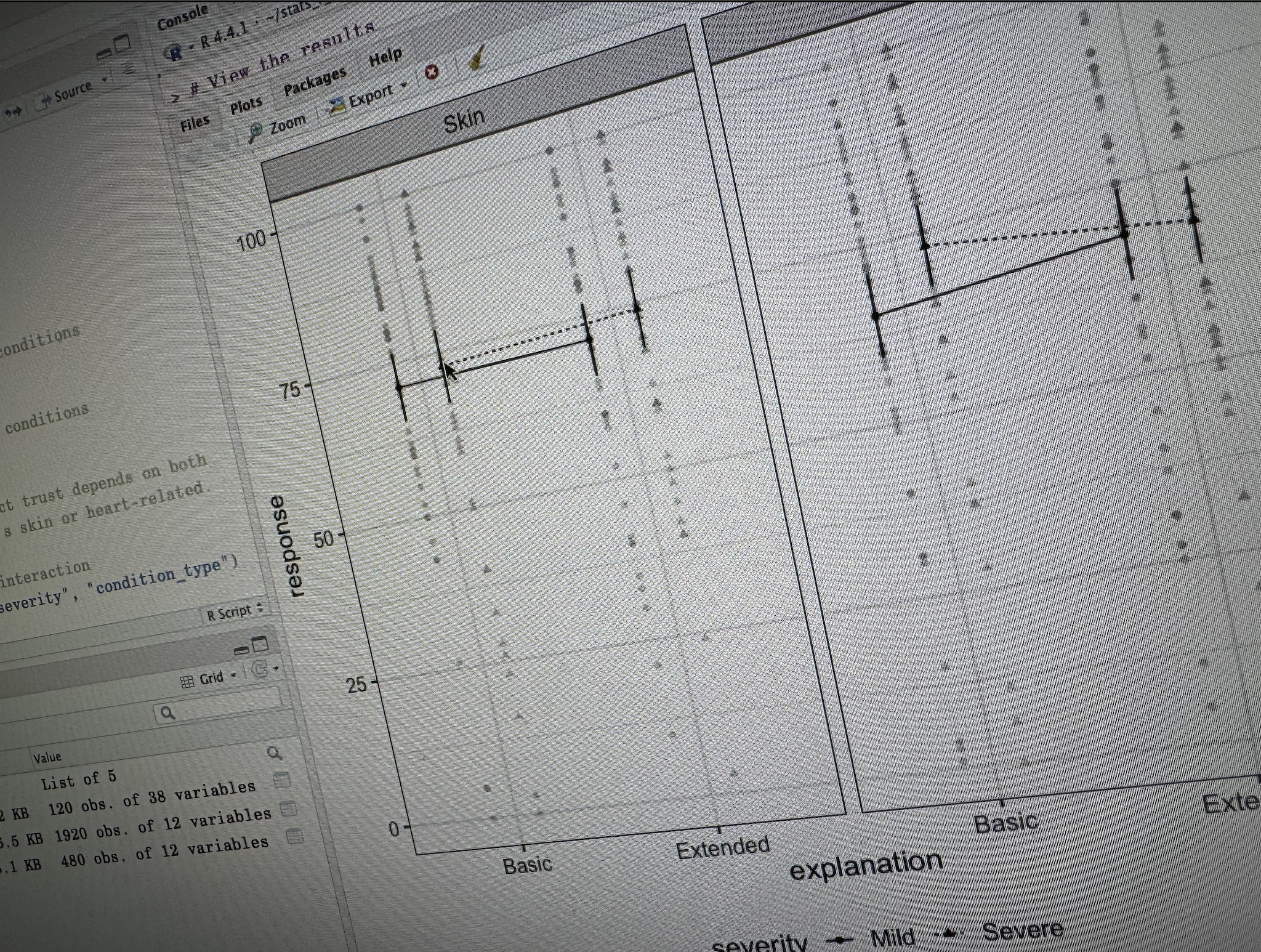

Design. 109 participants recruited on Testable Minds (120 initially, then 11 removed from the study) evaluated medical scenarios across cardiac and dermatological conditions with varying severity levels. Participants were randomly assigned to receive either basic explanations (high-level diagnostic information) or extended explanations (comprehensive technical details including medical terminology and diagnostic reasoning).

Ethical concerns. Participants with a history of anxiety disorders or hypochondria were screened out with an initial questionnaire. All participants’ data were handled in line with GDPR, and all participants were informed about the aims of the study.

Key findings.

Explanation depth had minimal overall impact. There was no significant main effect of explanation depth (p = 0.908), meaning extended explanations did not improve trust over basic ones across conditions.

Basic explanations worked better for severe cardiac conditions. Significant interaction between explanation depth and severity for cardiac conditions (p = 0.012), with participants showing higher trust for severe cardiac cases when given basic rather than extended explanations (p = 0.002).

Medical condition type influenced trust formation. Dermatological diagnoses consistently received higher trust scores than cardiac diagnoses across both explanation types.

The human-AI trust gap persisted regardless. Participants consistently rated human-delivered diagnoses 15.5 points higher than AI-delivered diagnoses (87.0 vs. 71.5), regardless of explanation depth or medical condition type.

Experiment 2.

Research Question. How does the timing of diagnostic information presentation influence patient trust and decision-making?

Prediction. Step-by-step progressive disclosure of information increases perceived trust.

Design. 47 participants recruited on Testable Minds (50 initially, then 3 removed from the study) experienced 2 scenarios, a mild and a severe cardiac diagnosis, each accompanied by a treatment recommendation. The diagnostic information was shared in 3 different temporal sequences: diagnosis before treatment recommendations, integrated step-by-step disclosure, or treatment recommendations before detailed diagnostic explanations.

Ethical concerns. Participants with a history of anxiety disorders or hypochondria were screened out with an initial questionnaire. All participants’ data were handled in line with GDPR, and all participants were informed about the aims of the study.

Key findings.

Diagnosis timing did not influence the overall perceived trust in AI. No significant main effects were found for diagnosis timing (F(2, 44) = 0.64, p = 0.533), severity of the condition (F(1, 44) = 0.00, p = 0.994), or their interaction (F(2, 44) = 0.66, p = 0.523) across all dependent variables.
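The degrees of freedom in these tests follow from the design, assuming (an inference from the reported statistics, not something stated in the design description) that timing varied between participants across k = 3 groups, while each of the N = 47 participants saw both m = 2 severity levels:

$$
\begin{aligned}
df_{\text{timing}} &= k - 1 = 3 - 1 = 2\\
df_{\text{error, between}} &= N - k = 47 - 3 = 44\\
df_{\text{severity}} &= m - 1 = 2 - 1 = 1\\
df_{\text{timing} \times \text{severity}} &= (k - 1)(m - 1) = 2 \times 1 = 2\\
df_{\text{error, within}} &= (N - k)(m - 1) = 44 \times 1 = 44
\end{aligned}
$$

These match the reported F(2, 44), F(1, 44) and F(2, 44) tests.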

The human-AI trust gap remained consistent. There was a significant main effect of doctor type (F(1, 44) = 26.97, p < 0.001), with participants showing significantly lower trust in AI-delivered diagnoses (M = 70.2) than in human-delivered diagnoses (M = 83.4).

Receiving the treatment before the diagnosis scored highest descriptively. Descriptive patterns consistently favoured the Post-diagnosis condition across all dependent variables, with the highest trust scores for both mild and severe conditions, although these differences were not statistically significant.

Participants' understanding of how the AI formulated the diagnosis did not differ across conditions. There were no significant differences in perceived understanding across timing conditions (F(2, 44) = 0.12, p = 0.886), indicating that when information is presented does not affect comprehension of how the AI reached its diagnosis.
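Both experiments were analysed with mixed ANOVAs. As a rough illustration of what such an analysis computes, the Python sketch below implements a mixed ANOVA with one between-subjects factor and one within-subjects factor from the standard sums-of-squares partition. It assumes, based on the reported degrees of freedom rather than anything stated above, that timing varied between participants and doctor type within participants; it leaves out severity for brevity. `mixed_anova` is a hypothetical helper, not the analysis code used in the study, and the scores are synthetic, so the printed F values mean nothing. Only the degrees of freedom (2 and 44, 1 and 44) mirror the study's structure.

```python
import random
from statistics import fmean


def mixed_anova(groups):
    """Mixed ANOVA: one between-subjects factor x one within-subjects factor.

    groups maps a group label (e.g. a timing condition) to a list of subjects;
    each subject is a list of scores, one per within-subjects level, in the
    same order for every subject (e.g. [human doctor, AI]).
    Returns {effect: (F, df_effect, df_error)}.
    """
    subjects = [s for subs in groups.values() for s in subs]
    n_subj, k, m = len(subjects), len(groups), len(subjects[0])
    grand = fmean(x for s in subjects for x in s)

    # Total variability, and the part due to differences between subjects.
    ss_total = sum((x - grand) ** 2 for s in subjects for x in s)
    ss_subjects = m * sum((fmean(s) - grand) ** 2 for s in subjects)

    # Between-subjects effect (group means) and its error term.
    group_means = {g: fmean(x for s in subs for x in s) for g, subs in groups.items()}
    ss_group = m * sum(len(subs) * (group_means[g] - grand) ** 2
                       for g, subs in groups.items())
    ss_err_between = ss_subjects - ss_group

    # Within-subjects effect (level means pooled over all subjects).
    level_means = [fmean(s[j] for s in subjects) for j in range(m)]
    ss_level = n_subj * sum((lm - grand) ** 2 for lm in level_means)

    # Group x level interaction, from deviations of the cell means.
    ss_inter = 0.0
    for g, subs in groups.items():
        for j in range(m):
            cell = fmean(s[j] for s in subs)
            ss_inter += len(subs) * (cell - group_means[g] - level_means[j] + grand) ** 2

    # Remaining within-subject variability is the within-subjects error term.
    ss_err_within = ss_total - ss_subjects - ss_level - ss_inter

    df_group, df_err_b = k - 1, n_subj - k
    df_level, df_inter, df_err_w = m - 1, (k - 1) * (m - 1), (n_subj - k) * (m - 1)
    ms_err_b = ss_err_between / df_err_b
    ms_err_w = ss_err_within / df_err_w
    return {
        "group": (ss_group / df_group / ms_err_b, df_group, df_err_b),
        "within": (ss_level / df_level / ms_err_w, df_level, df_err_w),
        "interaction": (ss_inter / df_inter / ms_err_w, df_inter, df_err_w),
    }


# Synthetic, made-up scores (NOT the study data): 3 timing groups of
# 16 + 16 + 15 = 47 participants, each rating a human and an AI diagnosis.
rng = random.Random(42)
synthetic = {
    group: [[rng.gauss(83, 10), rng.gauss(70, 10)] for _ in range(size)]
    for group, size in [("diagnosis first", 16), ("step by step", 16), ("treatment first", 15)]
}
for effect, (f, df1, df2) in mixed_anova(synthetic).items():
    print(f"{effect}: F({df1}, {df2}) = {f:.2f}")
```

A statistics package would also supply p-values, for example from the F distribution via `scipy.stats.f.sf(F, df1, df2)`; the sketch stops at the F ratios to stay dependency-free.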